The Machine Learning Lifecycle: An End-To-End Look

Artificial intelligence (AI) has taken the world by storm. Individuals are applying publicly available AIs to help them with work tasks, and organizations are increasing their investment in developing and running AIs. For most organizations that means working with the machine learning (ML) aspects of AI. This article provides an overview of the machine learning lifecycle, looking at it from beginning to end (and then back again).

The Machine Learning Lifecycle: From Training to Inferences

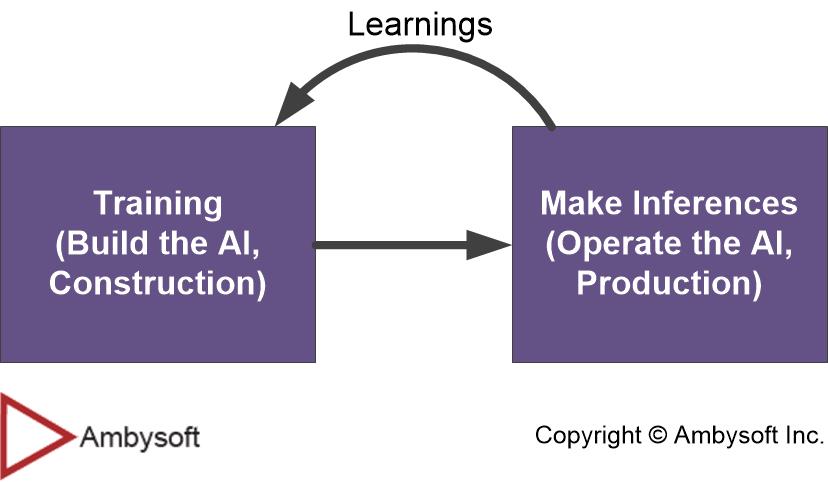

The AI literature is either incredibly dense or incredibly sparse regarding how to build AIs, depending on what you’re looking for. When it comes to the ML lifecycle, it tends to lean towards the sparse end of the spectrum. Figure 1 depicts the typical level of discourse that I see in much of the literature: you train your models, you deploy them into production where they are used to make inferences/predictions, and any learnings are fed back into the model to improve its quality. This is a great overview of the ML lifecycle, but as you can see it’s very high level. Let’s flesh it out a bit.

Figure 1. A very high-level machine learning lifecycle.

The Machine Learning Lifecycle: End-to-End Overview

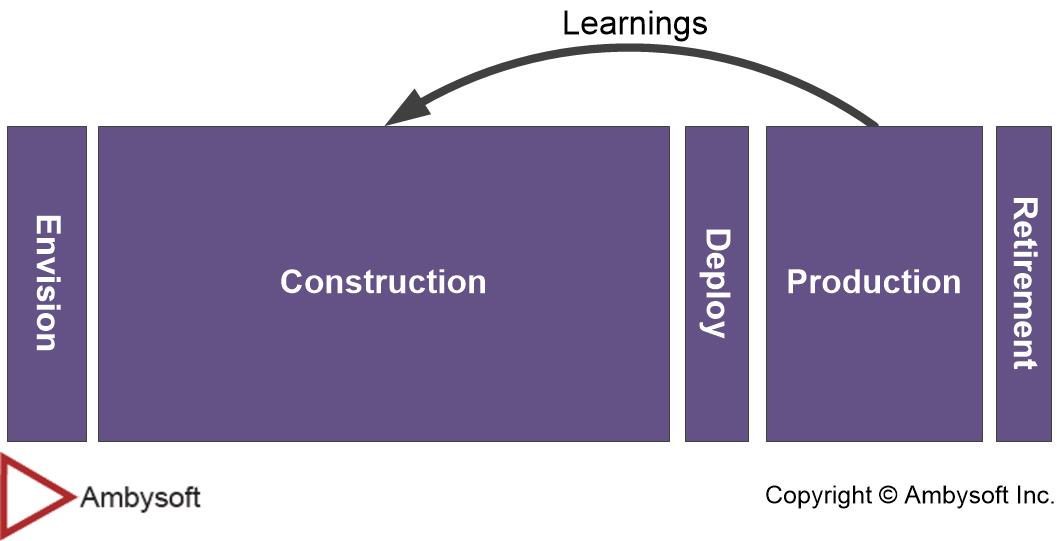

A more robust machine learning lifecycle is presented in Figure 2. This version depicts the full system development lifecycle (SDLC) for ML, with the following phases:

- Envision. During this phase, which hopefully lasts a few days to a week or two, your aim is to develop a vision for what you hope to achieve and how you hope to achieve it. You will be performing typical initiation activities such as putting the initial team together, initial modeling, initial estimation, and initial planning. Note the use of the word “initial” everywhere. In PMI’s Disciplined Agile (DA) toolkit the Envision phase is called Inception, and in Scrum it is called “Sprint 0” (although some in the Scrum community struggle to recognize this).

- Construction. During this phase you iteratively and collaboratively build your ML model. Developing the first release of your model is likely to take weeks or even months. Over time you may find that changes in your operational environment require you to frequently update and deploy new versions of your model(s). This phase is often called Training by AI practitioners and Development or Programming by non-AI developers.

- Deploy. This phase, better thought of as an activity, encompasses the effort required to release your model(s) into production. I say activity because you should strive to fully automate the deployment of your model(s) via standard DataOps techniques. This phase is sometimes referred to as Release, and in the DA toolkit as Transition.

- Production. This is the period where your end users work with your AI and you in turn perform the usual operations and support of that running solution. This phase is often called Inference by AI practitioners, and Operations and Support (O&S) or simply Operations in IT methods.

- Retirement. All good things must come to an end. During this phase you remove the AI from your production environment. This phase is also referred to as Decommissioning.

Figure 2. Overview of the machine learning lifecycle.

On the surface this lifecycle may appear to be serial in nature, an approach sometimes referred to as waterfall or even “predictive”, but nothing could be further from the truth. This becomes apparent when you go down one more level of detail.

The Machine Learning Lifecycle: A More Detailed Look

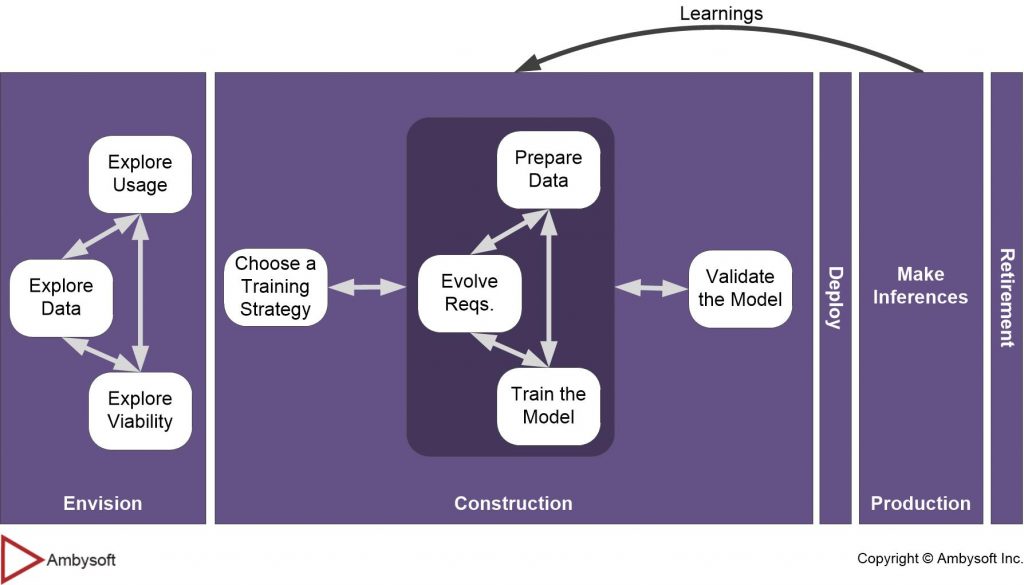

A more detailed version of the machine learning lifecycle is depicted in Figure 3. A few interesting points about this rendering of the ML lifecycle:

- It depicts only key activities. Key activities, such as Explore Usage and Make Inferences, are explicitly called out. However, many other activities, such as building your team, obtaining funding, and reporting status, are omitted for the sake of simplicity. The key activities are described below.

- It’s a hybrid. ML initiatives require a hybrid strategy that embodies concepts from serial, agile, and lean ways of thinking (WoT). The serial aspect can be seen in the lifecycle’s named phases, described earlier. Agile is reflected in the iterative nature of the activities within the phases, hopefully performed in a collaborative manner. Lean strategies should be applied to ensure effective flow of work.

- Existing methods don’t map well to it. The bottom line is that you need to choose your own way of working (WoW).

Figure 3. A detailed machine learning lifecycle.

Figure 3 calls out the following key activities:

- Explore usage. How will the end users of your AI work with it? Usage is often explored via some form of use case or user story, but frankly there are many modeling options available to you. The key issue is to understand how your end users will interact with your solution. The goal at this point is to gain a high-level understanding of what you hope to accomplish; the details will evolve as your stakeholders learn more during Construction.

- Explore data. What data will be required to train your model? Early in the initiative you want to determine whether you have the data you need and what its level of quality is. If you’re missing some of the data you need, identify a strategy to obtain it or, in the worst case, to create it yourself.

- Explore viability. AI initiatives suffer from a very high failure rate: roughly 85% of them fail. This implies that you want to regularly ask whether your initiative is viable technically, economically, and operationally. When the answer is no, which is likely, you need to either get the initiative onto a better trajectory or cancel it.

- Choose a training strategy. Given the nature of the opportunity that you’re hoping to address, you will need to develop an overall training strategy. This includes identifying one or more candidate algorithms, platform choices, testing strategies, and perhaps initial deployment choices (see below).

- Prepare data. Data preparation often proves to be an onerous task involving data analysis, feature selection, and, more often than not, significant data cleansing. This activity is often called data wrangling.

- Evolve requirements. As your construction/training efforts progress you will start to identify what is possible to do, what isn’t possible, and what might be possible if only the data were sufficient. As a result, your stakeholders will be motivated to evolve their requirements to reflect these new learnings.

- Train the model(s). This is a mostly automated activity, with the exception of data labelling. Depending on your environment, you may find that you’re really training several models, not just one. For example, imagine an AI built to detect vehicle insurance fraud. The company may discover that it needs a collection of models to do that, perhaps one for each distinct territory that it operates in. The issue is that each territory has different laws, a different economic environment, and different cultural norms. These factors, and more, lead to very different claim behaviours on the part of customers.

- Validate the model. This is where you test your model, rating its effectiveness as well as actively exploring potential biases in it (and there will be biases). See my blog post Bias and Machine Learning: 7 Strategies for Better AI to better understand model bias.

- Deploy. There are several deployment architectures that you may choose from. Will your model run in a centralized manner, either in the cloud or on premises? Will it run on edge devices such as phones? Or will it run as a combination of those strategies? Regardless, you want to automate your deployment efforts appropriately, particularly if you will be deploying model updates often. My philosophy is that deployment should be automated to the extent that it is effectively zero risk, zero cost, and zero friction. In short, deployment done right is a non-event.

- Make inferences. Yay, this is the fun stuff! Your end users work with your AI solution to create value via effective (I hope!) inferences/predictions.
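To make the Train and Validate activities a little more concrete, here is a minimal Python sketch of the vehicle insurance fraud example: train one model per territory, then score each model on held-out claims and flag a large accuracy gap between territories for closer investigation. Everything in it is a hypothetical illustration, not a recommended implementation: the `Claim` fields, the toy `ThresholdModel` standing in for a real ML algorithm, the sample data, and the 0.1 tolerance are all assumptions. A real initiative would use a proper ML library, far more data, and a much richer bias analysis.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Claim:
    territory: str   # hypothetical field: where the claim was filed
    amount: float    # hypothetical feature: claimed amount
    is_fraud: bool   # label, e.g. produced during data labelling


@dataclass
class ThresholdModel:
    """Toy stand-in for a real ML model: flags any claim above a learned amount."""
    threshold: float

    def predict(self, claim: Claim) -> bool:
        return claim.amount > self.threshold


def accuracy(model: ThresholdModel, claims: list[Claim]) -> float:
    """Fraction of claims whose prediction matches the label."""
    correct = sum(model.predict(c) == c.is_fraud for c in claims)
    return correct / len(claims)


def train(claims: list[Claim]) -> ThresholdModel:
    """'Train' by trying each observed amount as a threshold and keeping the most accurate."""
    candidates = sorted({c.amount for c in claims})
    best = max(candidates, key=lambda t: accuracy(ThresholdModel(t), claims))
    return ThresholdModel(best)


def group_by_territory(claims: list[Claim]) -> dict[str, list[Claim]]:
    """Split claims into per-territory lists, preserving first-seen territory order."""
    groups: dict[str, list[Claim]] = defaultdict(list)
    for c in claims:
        groups[c.territory].append(c)
    return dict(groups)


def train_per_territory(claims: list[Claim]) -> dict[str, ThresholdModel]:
    """Train one model per territory, since each has its own laws, economy, and norms."""
    return {t: train(cs) for t, cs in group_by_territory(claims).items()}


def validate(models: dict[str, ThresholdModel], holdout: list[Claim],
             max_gap: float = 0.1) -> tuple[dict[str, float], bool]:
    """Score each territory's model on held-out claims and check the spread of scores.

    A large gap between territories is a signal to investigate, not proof of bias.
    """
    scores = {t: accuracy(models[t], cs) for t, cs in group_by_territory(holdout).items()}
    within_tolerance = max(scores.values()) - min(scores.values()) <= max_gap
    return scores, within_tolerance


# Hypothetical sample data, purely for illustration.
train_claims = [
    Claim("North", 100, False), Claim("North", 200, False),
    Claim("North", 900, True), Claim("North", 1000, True),
    Claim("South", 50, False), Claim("South", 80, False),
    Claim("South", 300, True), Claim("South", 400, True),
]
holdout_claims = [
    Claim("North", 150, False), Claim("North", 950, True),
    Claim("South", 100, False), Claim("South", 350, True),
]

models = train_per_territory(train_claims)
scores, within_tolerance = validate(models, holdout_claims)
print(scores, within_tolerance)  # {'North': 1.0, 'South': 0.5} False
```

Keeping training and validation as separate functions run over separate data is what makes both easy to automate as a repeatable pipeline step, in the same spirit as automating deployment. Comparing performance across groups is only one of many ways to begin probing a model for bias.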

Where To Go To Learn More About Machine Learning

If you’re looking for details regarding AI then look no further than the 1,000+ page Artificial Intelligence: A Modern Approach by Russell and Norvig. This book is an incredible technical textbook that is a must-read for anyone who is serious about learning about how to build AIs. I have also found the O’Reilly series of machine learning books to be great resources for anyone implementing ML models.

Let Me Help

In short, I help organizations to succeed at software development. I do this by sharing my experiences and my ideas about how to be successful at software development and enterprise data. I will help you to understand and improve upon both your ways of working (WoW) and your ways of thinking (WoT), particularly around the application of artificial intelligence (AI) and agile data methods.

If you are about to embark on an AI initiative, or have run aground on data quality issues, and would like the advice of an unbiased outsider, you should consider reaching out to see if I’m currently available. In the meantime, you may find my artificial intelligence blog postings insightful.